Access to AI that handles or produces inappropriate content is a subject of ongoing controversy and ethical debate. This article explores how people encounter or seek out AI platforms that deal with such content, and why it matters to understand both the technology involved and the legal ramifications.

Navigating the Fringes

Mainstream AI applications developed by well-known tech companies typically have stringent content moderation policies that explicitly prohibit inappropriate content, including sexually explicit material, hate speech, and violent content. These applications use filtering technologies to enforce those policies and keep interactions within them.

Where to Find Less Regulated AI

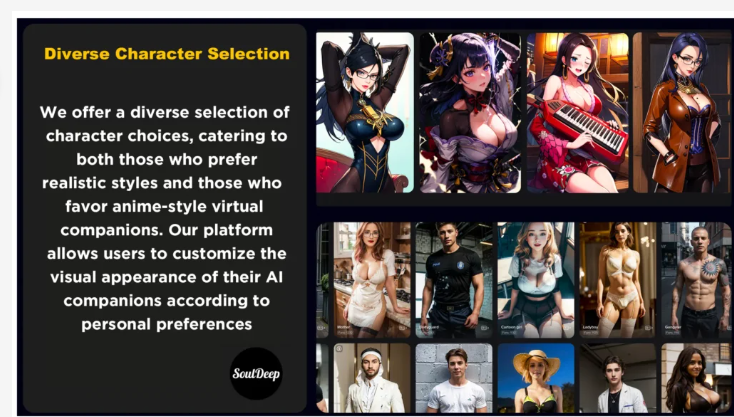

However, outside the mainstream sphere, there are AI platforms that operate under different sets of rules. These are often smaller, niche applications that cater to specific adult-oriented communities or interests. Accessing these platforms usually requires a deliberate search beyond popular app stores, as they may not adhere to the standard content policies enforced by major tech companies.

Understanding the Risks

Interacting with AIs that produce or allow inappropriate content carries significant risks. Platforms outside the mainstream are more likely to be poorly secured and may not adequately protect user data, which can lead to privacy breaches and misuse of personal information.

Ethical Considerations and Legal Boundaries

Users seeking these types of AI must be acutely aware of the legal boundaries and ethical implications. Many countries have strict laws regarding digital content, particularly content that is considered harmful or exploitative. Engaging with AI that facilitates access to such material can have serious legal consequences.

Technology Behind the Curtain

Platforms that handle inappropriate content without mainstream filters generally rely on less restrictive algorithms and, in some cases, private hosting. They might not employ the usual safeguards, such as content recognition algorithms designed to flag and block harmful material.
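To make the idea of a safeguard concrete, the following is a minimal sketch of the kind of content recognition check that such platforms may omit. It is not any vendor's actual implementation: the names `CATEGORY_PATTERNS`, `moderate`, and `respond` are hypothetical, the patterns are placeholders, and production systems typically rely on trained classifiers rather than keyword matching. Checking both the prompt and the reply is also an assumption made for illustration.

```python
import re
from dataclasses import dataclass, field

# Hypothetical category table. The placeholder patterns only illustrate the
# control flow; a real system would use trained classifiers instead.
CATEGORY_PATTERNS = {
    "explicit": re.compile(r"\bplaceholder_explicit\b", re.IGNORECASE),
    "hate": re.compile(r"\bplaceholder_hate\b", re.IGNORECASE),
    "violence": re.compile(r"\bplaceholder_violence\b", re.IGNORECASE),
}


@dataclass
class ModerationResult:
    allowed: bool
    flagged: list = field(default_factory=list)


def moderate(text: str) -> ModerationResult:
    """Flag every category whose pattern appears in the text."""
    flagged = sorted(
        name for name, pattern in CATEGORY_PATTERNS.items() if pattern.search(text)
    )
    return ModerationResult(allowed=not flagged, flagged=flagged)


def respond(prompt: str, generate) -> str:
    """Check the prompt, call the model, then check the reply (assumed design)."""
    if not moderate(prompt).allowed:
        return "[blocked: prompt flagged]"
    reply = generate(prompt)
    if not moderate(reply).allowed:
        return "[blocked: reply flagged]"
    return reply
```

Here `generate` stands in for whatever function produces the model's reply. A platform without this kind of gate would return the reply directly, with nothing flagged or blocked.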

A Call for Responsible Use

Users who navigate to these AI platforms should exercise extreme caution and always ensure that their actions remain within the bounds of the law. It is also important to consider the broader impacts of engaging with and supporting the development of inappropriate AI.

Informed Decisions Lead the Way

For those curious about the technical capabilities and ethical considerations surrounding AIs that handle inappropriate content, thorough research and an understanding of both the technology and societal norms are crucial. Together they help ensure that any engagement with such AI is informed and responsible.

Looking to the Future

As AI technology evolves, so too does the dialogue around what is deemed appropriate or inappropriate. The future may see changes in how AI handles sensitive content, influenced by shifting societal values and advancements in technology. Engaging with these technologies responsibly will help shape a future where AI can be used safely and ethically in a wider range of contexts.